Quad-Bayer Sensors - Impact on Image Quality and Photogrammetry

Lukas Zmejevskis

Quad-Bayer image sensor technology is quite common but not well understood by consumers. We often mention that a drone or smartphone camera has a quad-Bayer sensor, so its stated resolution is not "real." In this article, we go a bit deeper into the technology and share our latest findings on quad-Bayer image quality and its effect on photogrammetry.

RGB Color Model

An imaging sensor converts light into digital signals that the imaging pipeline can later interpret, display, or print. A sensor can also be sensitive to light beyond the visible range, such as infrared, which is what thermal cameras see. For the purposes of this article, however, we are only talking about gathering visible light to make photos that represent what the human eye sees.

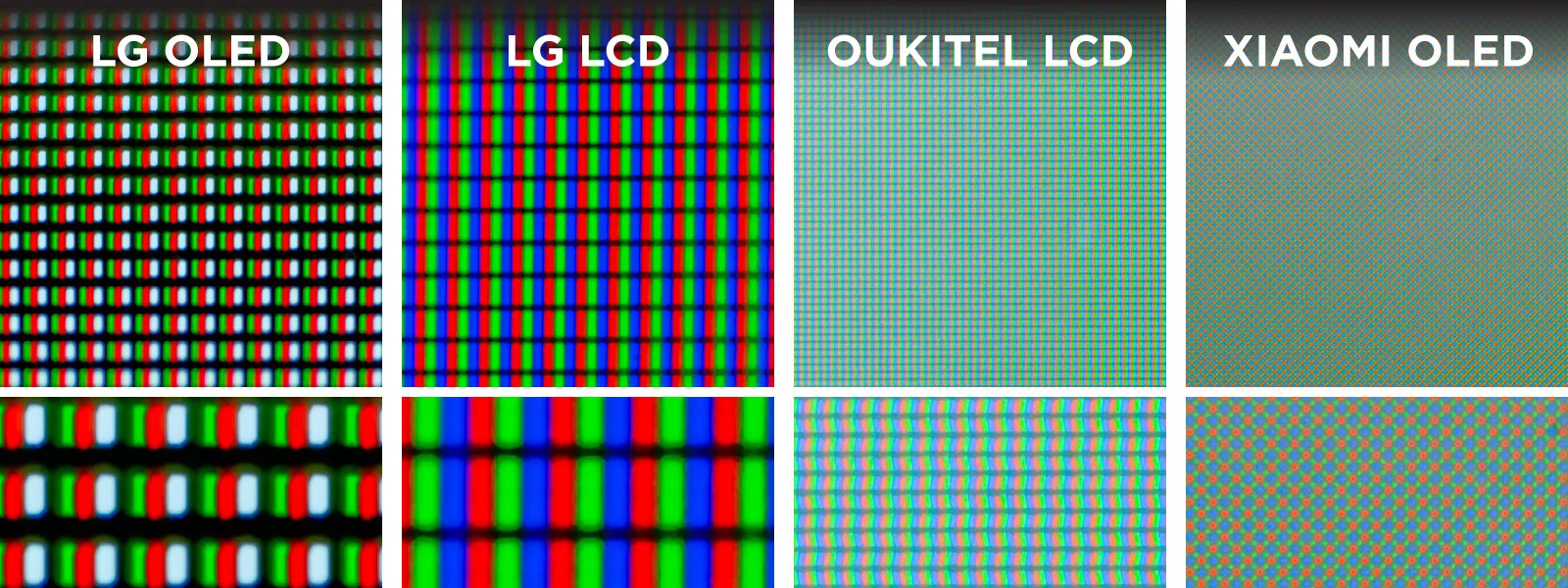

The RGB color model, which describes light in terms of three primary colors, is based on human vision and underpins most of the light-gathering and light-emitting devices we make. This is why LCD and OLED screens use red, green, and blue emitters to create colors and, in turn, the final image. Below are a few macro photos of different screens.

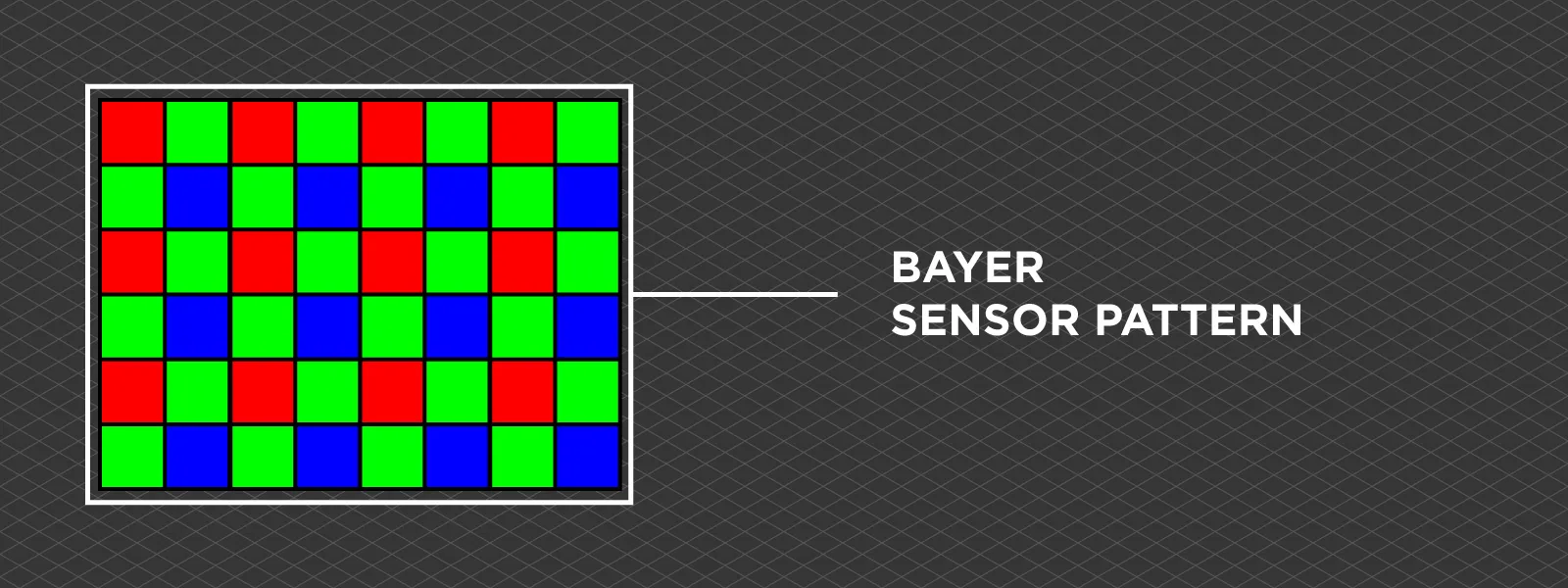

The same applies to light-gathering devices: image sensors. Each photosite on a sensor is sensitive to red, green, or blue light, which is achieved by placing a color filter over it. There are other ways of capturing full-color information, but a color filter array arranged in a Bayer pattern is the most common. Below is an illustration of a Bayer pattern on a typical CMOS sensor.

Bayer vs. Quad-Bayer Pattern

As you can see, the green-, red-, and blue-sensitive photosites are arranged in a pattern that favors green: half of the photosites are green, and a quarter each are red and blue. Green is favored because the human eye is most sensitive to green light, and gathering more green information gives a better representation of light intensity. This is the standard Bayer filter, patented by Bryce Bayer in 1976. A 2 by 2 square of green, red, green, and blue photosites forms its repeating unit; each photosite records only one color, and the two missing colors are filled in during processing.
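To make the green bias concrete, here is a minimal Python sketch that lays out the filter colors of a small Bayer sensor and counts them. It assumes the common RGGB ordering of the 2 by 2 tile; real sensors may start the pattern on a different color (GRBG, BGGR, and so on), which shifts the layout but not the proportions. The function names are our own, for illustration only.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Color of the filter over photosite (row, col) in an RGGB Bayer pattern.

    The repeating 2x2 tile is:
        R G
        G B
    """
    tile = (("R", "G"), ("G", "B"))
    return tile[row % 2][col % 2]


def bayer_mask(height: int, width: int) -> list[list[str]]:
    """Filter layout for a hypothetical height x width sensor."""
    return [[bayer_color(r, c) for c in range(width)] for r in range(height)]


mask = bayer_mask(4, 4)
for row in mask:
    print(" ".join(row))

# Share of photosites under each filter color.
counts = Counter(color for row in mask for color in row)
total = sum(counts.values())
for color in "RGB":
    print(f"{color}: {counts[color] / total:.0%}")
```

Running it prints the 4 by 4 mosaic followed by `R: 25%`, `G: 50%`, and `B: 25%`.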

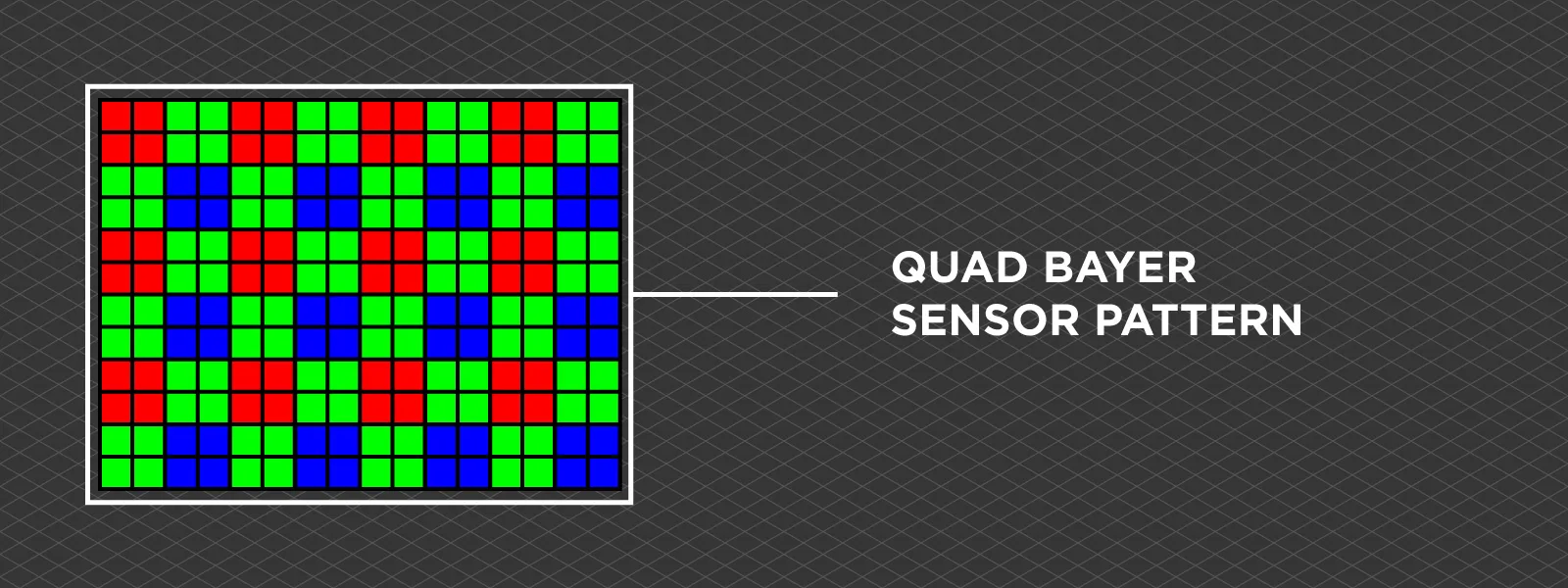

A quad-Bayer is a very similar pattern, but each RGB photosite is split into four (or even nine) segments that sit under the same color filter. This splitting enables a few sensor features, such as better autofocus or single-shot HDR capture. However, it also inflates the headline megapixel count, which marketing was quick to pick up on. This is why we see ridiculous claims, such as phones with 200-megapixel sensors and "lossless" digital zoom.
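The quad-Bayer layout can be sketched by stretching each color cell of the Bayer tile over a block of photosites. In this hypothetical Python helper, `cell=2` gives a quad-Bayer layout and `cell=3` the nine-photosite variant; the RGGB ordering and the sensor dimensions below are assumptions chosen to keep the arithmetic readable, not the specifications of any particular sensor. Counting the single-color cells shows where the marketing number comes from: a 48-megapixel quad-Bayer sensor has 12 million color cells, the same as a 12-megapixel standard Bayer pattern.

```python
def cfa_color(row: int, col: int, cell: int = 1) -> str:
    """Filter color at photosite (row, col) when each Bayer color cell spans cell x cell photosites.

    cell=1 is a standard Bayer pattern, cell=2 a quad-Bayer pattern,
    cell=3 the nine-photosite variant. RGGB ordering is assumed.
    """
    tile = (("R", "G"), ("G", "B"))
    return tile[(row // cell) % 2][(col // cell) % 2]


def color_cells(height: int, width: int, cell: int) -> int:
    """Number of single-color cells (groups of photosites under one filter color)."""
    return (height // cell) * (width // cell)


# One 4x4 tile of a quad-Bayer layout:
# R R G G
# R R G G
# G G B B
# G G B B
for r in range(4):
    print(" ".join(cfa_color(r, c, cell=2) for c in range(4)))

# Hypothetical sensor sizes, in photosites (height, width).
print(f"{color_cells(6000, 8000, cell=2):,}")   # 48 MP, 2x2 cells -> 12,000,000
print(f"{color_cells(9000, 12000, cell=3):,}")  # 108 MP, 3x3 cells -> 12,000,000
```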

Splitting a single photosite into four does not increase the pixel count in the conventional sense. The same amount of light and color information is gathered whether or not a photosite is split. Nevertheless, manufacturers also let you shoot in these high-resolution modes, even though the number of actual pixels is the same. A question naturally arises: where is the extra data coming from, and do we see actual differences in detail?
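One way to see that splitting adds no new color information is to go the other way and merge the four same-color photosites back together, which is roughly what a 12-megapixel mode does. The Python sketch below averages each 2 by 2 group of a quad-Bayer mosaic, assuming RGGB ordering and toy values. Many sensors combine the signal on-chip rather than averaging digital values, so treat this only as an illustration of the arithmetic, not as any camera's actual readout.

```python
def bin_quad_bayer(raw: list[list[float]]) -> list[list[float]]:
    """Average each 2x2 same-color group of a quad-Bayer mosaic.

    The result is a standard Bayer mosaic with half the height and width,
    i.e. a quarter of the photosite count (for example 48 MP -> 12 MP).
    """
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("mosaic dimensions must be even")
    return [
        [
            (raw[r][c] + raw[r][c + 1] + raw[r + 1][c] + raw[r + 1][c + 1]) / 4
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]


# Toy 4x4 quad-Bayer readout; the 2x2 groups are R, G (top) and G, B (bottom).
raw = [
    [10, 12, 50, 52],
    [11, 13, 51, 53],
    [60, 62, 90, 92],
    [61, 63, 91, 93],
]
binned = bin_quad_bayer(raw)
print(binned)  # [[11.5, 51.5], [61.5, 91.5]]
```

The binned result is an ordinary RGGB Bayer mosaic at half the width and height, which is why the 12-megapixel output carries the same color information as the 48-megapixel readout.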

12 vs 48 Megapixels

We have tested quite a few cameras with quad-Bayer sensors over the life of our blog. We also do a lot of drone testing, and two of the best and most popular drones on the market share the same quad-Bayer sensor, one of them being the DJI Mini 4 Pro. This is a second-generation version of the sensor, with improved processing capabilities compared to the DJI Mini 3, which used it first.

These 12-megapixel sensors, with each photosite split into four, let marketing advertise 48 megapixels. Yet we can shoot 48-megapixel RAW photos, and we do see a difference between the 12- and 48-megapixel shots. How is this possible?

To represent the real world accurately, an algorithm must convert the Bayer pattern data into actual colors and luminance for each pixel. This process is called debayering or demosaicing. For a quad-Bayer sensor, the algorithm has to adapt: it must produce 48 megapixels' worth of output from data that is normally used to create a 12-megapixel photo, so there may be some fundamental differences in how the image turns out.
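We do not know which demosaicing algorithm DJI uses, so as a generic illustration, here is the simplest textbook method, bilinear interpolation, applied to a standard RGGB Bayer mosaic in Python. Each photosite keeps its measured value, and the two missing colors are averaged from same-color neighbors in the surrounding 3 by 3 window; for interior pixels this is exactly bilinear demosaicing. Production pipelines use far more sophisticated, edge-aware methods, so this sketch only shows the principle.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color of photosite (row, col) in an RGGB Bayer mosaic."""
    return (("R", "G"), ("G", "B"))[row % 2][col % 2]


def demosaic_bilinear(raw: list[list[float]]) -> list[list[tuple[float, float, float]]]:
    """Bilinear demosaicing of an RGGB Bayer mosaic of at least 2x2 photosites.

    Each photosite keeps its measured value for its own filter color. Each
    missing color is the mean of the same-color photosites in the surrounding
    3x3 neighborhood, clipped at the image border. Returns (R, G, B) per pixel.
    """
    h, w = len(raw), len(raw[0])
    image = []
    for r in range(h):
        row_out = []
        for c in range(w):
            pixel = []
            for channel in "RGB":
                if bayer_color(r, c) == channel:
                    pixel.append(raw[r][c])  # measured directly
                    continue
                samples = [
                    raw[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, h))
                    for cc in range(max(c - 1, 0), min(c + 2, w))
                    if bayer_color(rr, cc) == channel
                ]
                pixel.append(sum(samples) / len(samples))  # interpolated
            row_out.append(tuple(pixel))
        image.append(row_out)
    return image


# A flat, uniformly colored scene: each photosite sees only its own filter color.
scene = {"R": 200.0, "G": 100.0, "B": 50.0}
mosaic = [[scene[bayer_color(r, c)] for c in range(4)] for r in range(4)]
rgb = demosaic_bilinear(mosaic)
print(rgb[1][2])  # (200.0, 100.0, 50.0)
```

For a quad-Bayer sensor shooting at full resolution, the quad layout is commonly rearranged ("remosaiced") into a standard Bayer layout before a step like this, which gives the pipeline one more place to introduce rendering differences.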

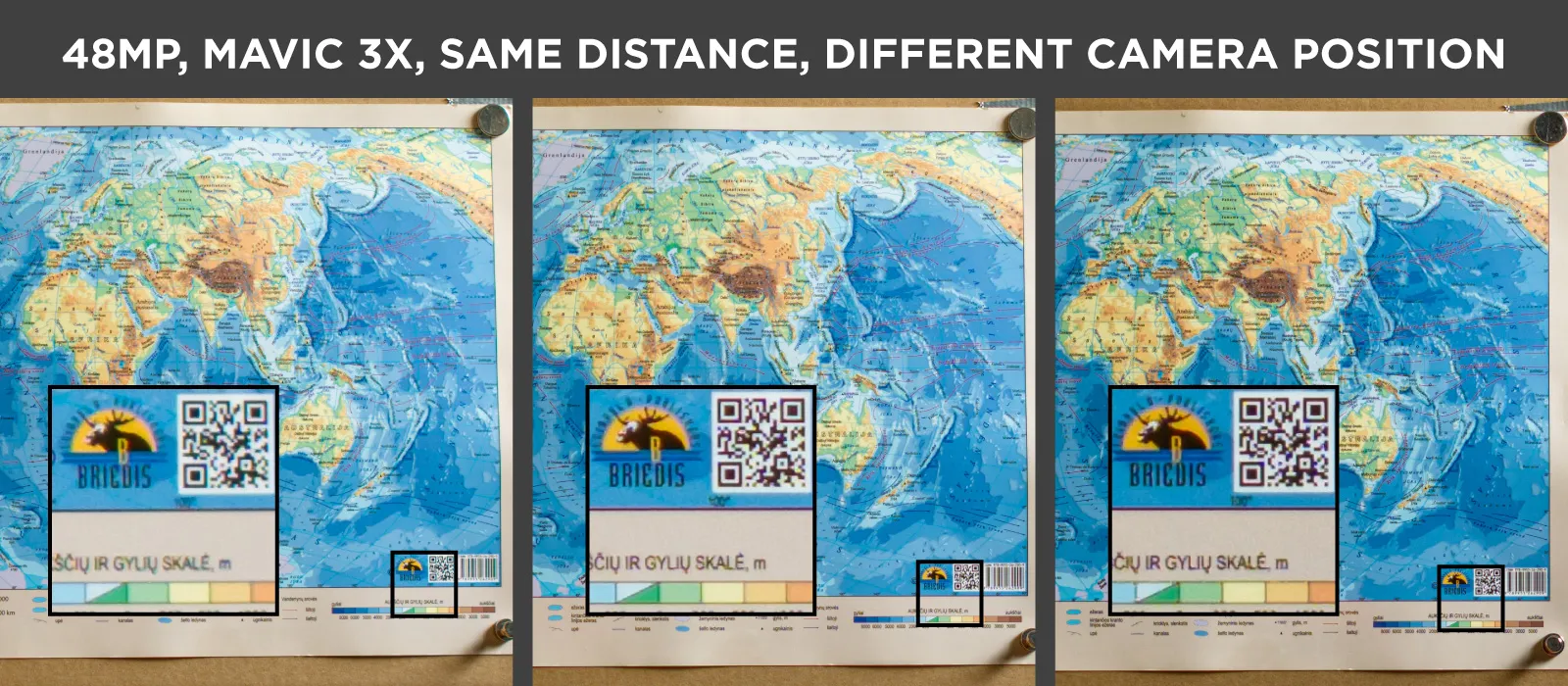

We made a few examples to evaluate how much detail we gain by shooting in 48-megapixel mode on the DJI Mini 4 Pro and on the 3x camera of the Mavic 3 Pro (which has the same sensor). The subject is a printed map shot in a controlled environment, and both examples are zoomed in well past 100 percent.

We shot RAW and processed the photos neutrally to maximize detail and quality. The final exports are high-quality JPEGs cropped heavily on the map, which takes up less than one-third of the frame. However, due to framing differences, the two pairs of examples are not comparable with each other. Compare only the 12- and 48-megapixel results from the same camera.

Photogrammetry Experience

In our previous article, we flew a grid flight using the DJI Mini 4 Pro and the Pixpro Waypoints service. We then repeated the same flight with only one change: we switched to shooting at 48-megapixel resolution.

We initially had trouble processing the 48-megapixel image set. Depending on which reconstruction algorithm we chose, the processing failed to include all of the photos in the 3D reconstruction. The 12-megapixel image set had no such issues.

We are not sure yet, but since the shooting resolution was the only thing that changed between the two flights, we naturally suspect that the debayering algorithm may have something to do with it. Photogrammetry works by matching features between overlapping photos; if there is an issue with those features, 3D reconstruction may fail.
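Photogrammetry packages differ in the details, and we do not know exactly how each reconstruction algorithm we tried handles it, but a common building block is nearest-neighbor matching of feature descriptors filtered by Lowe's ratio test. The Python sketch below uses tiny hypothetical two-number descriptors (real descriptors such as SIFT have 128 dimensions) to show how a feature whose appearance shifts between photos can lose its match.

```python
import math


def match_features(desc_a, desc_b, ratio=0.8):
    """Match descriptors from photo A to photo B using Lowe's ratio test.

    For each descriptor in A, find its nearest and second-nearest neighbors
    in B by Euclidean distance. Keep the match only if the nearest one is
    clearly better than the runner-up (d1 < ratio * d2).
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    if len(desc_b) < 2:
        raise ValueError("need at least two descriptors in photo B")
    matches = []
    for i, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((i, j1))
    return matches


# Hypothetical descriptors of three features seen in photo B.
desc_b = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]
# The same features in photo A, rendered consistently.
consistent = [[0.1, 0.0], [9.9, 0.2], [0.0, 9.8]]
# The middle feature rendered differently, so it resembles two features in B equally.
inconsistent = [[0.1, 0.0], [5.0, 0.0], [0.0, 9.8]]

print(match_features(consistent, desc_b))    # [(0, 0), (1, 1), (2, 2)]
print(match_features(inconsistent, desc_b))  # [(0, 0), (2, 2)]
```

The ambiguous feature is rejected rather than mismatched; if this happens often enough across a photo, the photo may end up with too few matches to be placed in the reconstruction.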

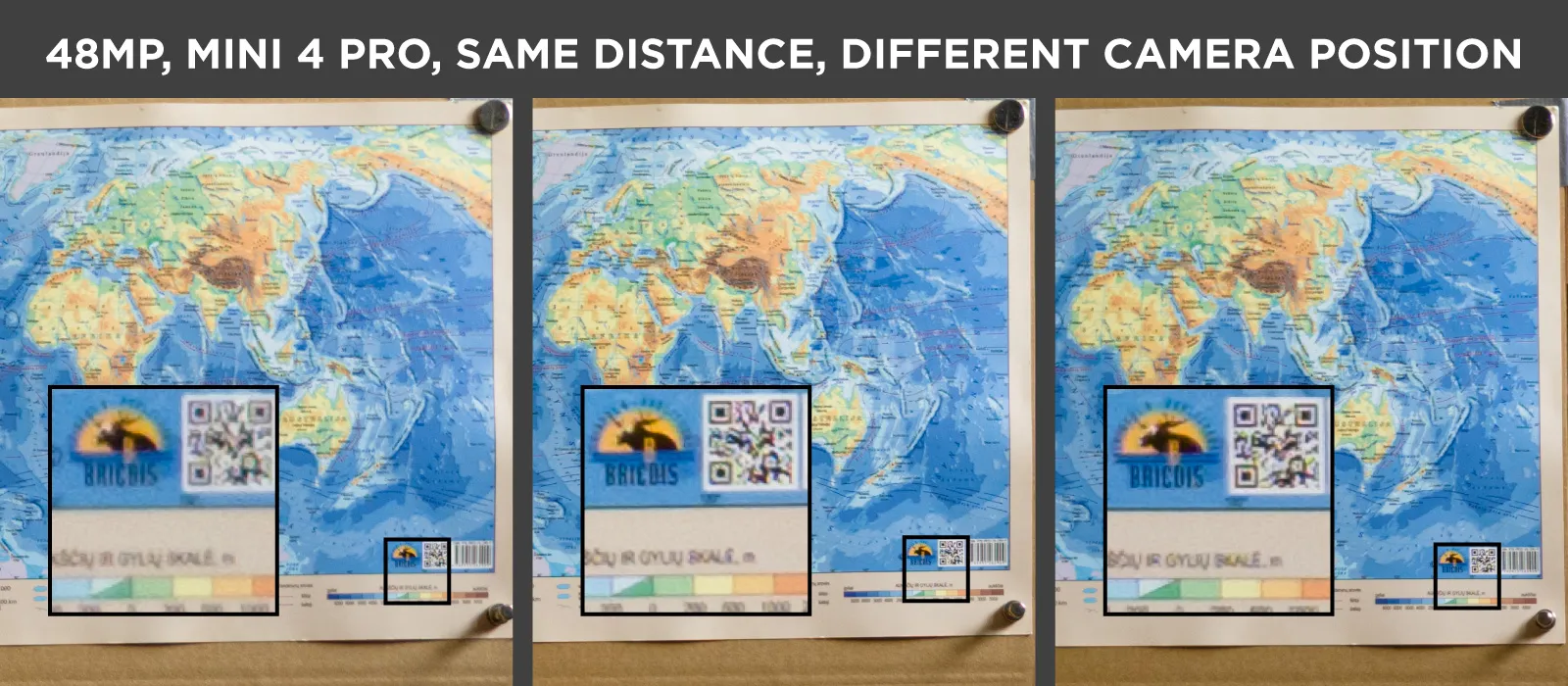

We took three photos of the same map, with the map in a different position in the frame each time. This mimics a photogrammetric scan with overlapping pictures. Here, we are looking for inconsistencies in how the same subject is rendered across the images.
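One simple way to put a number on such inconsistencies is normalized cross-correlation (NCC) between crops of the same map region taken from each photo. The Python sketch below assumes the crops have already been aligned and resized to the same dimensions (that step is not shown) and uses tiny hypothetical pixel values. A score of 1.0 means the crops match up to brightness and contrast; lower scores mean the same subject was rendered differently.

```python
import math


def ncc(patch_a, patch_b):
    """Normalized cross-correlation of two equally sized grayscale patches.

    Returns a value in [-1, 1]; 1.0 means the patches are identical up to a
    change in brightness and contrast.
    """
    a = [v for row in patch_a for v in row]
    b = [v for row in patch_b for v in row]
    if len(a) != len(b):
        raise ValueError("patches must have the same size")
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        raise ValueError("patch has no contrast")
    return sum(x * y for x, y in zip(da, db)) / denom


def pairwise_consistency(crops):
    """NCC for every pair of aligned crops of the same subject."""
    return {
        (i, j): ncc(crops[i], crops[j])
        for i in range(len(crops))
        for j in range(i + 1, len(crops))
    }


crops = [
    [[10, 20], [30, 40]],
    [[20, 40], [60, 80]],  # same detail, twice the brightness and contrast
    [[10, 40], [30, 20]],  # detail rendered differently
]
scores = pairwise_consistency(crops)
for pair, score in scores.items():
    print(pair, round(score, 3))  # (0, 1) 1.0, then (0, 2) 0.2, then (1, 2) 0.2
```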

Conclusion

There are some slight rendering differences between the higher-resolution photos, which may contribute to photogrammetric inconsistency. Still, we did not notice any significant flaws in the algorithm. Higher-resolution images provide a little more detail, but at a price: files are larger, the lens's optical flaws become more visible, and the camera operates more slowly. If the slightly better detail is worth these downsides, shoot RAW at the higher resolution to get the most out of your camera.
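To put "files are larger" into rough numbers, here is a back-of-the-envelope Python estimate of uncompressed raw data per photo and per flight. The 12-bit sample depth and the 300-photo flight are hypothetical assumptions, and real DNG files are often compressed and carry metadata and previews, so actual sizes will differ; the point is the roughly fourfold ratio.

```python
def raw_size_mb(megapixels: float, bits_per_sample: int = 12) -> float:
    """Uncompressed size in megabytes of a raw mosaic with one sample per photosite.

    The 12-bit default is an assumption for illustration, not a camera spec.
    """
    # (megapixels * 1e6 samples) * bits / 8 bits-per-byte / 1e6 bytes-per-MB
    return megapixels * bits_per_sample / 8


photos_per_flight = 300  # hypothetical grid flight
for mp in (12, 48):
    per_photo = raw_size_mb(mp)
    per_flight_gb = per_photo * photos_per_flight / 1000
    print(f"{mp} MP: {per_photo:.0f} MB per photo, {per_flight_gb:.1f} GB per flight")
```

Under these assumptions, this prints 18 MB versus 72 MB per photo, and 5.4 GB versus 21.6 GB per flight.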

Photographer - Drone Pilot - Photogrammetrist. Years of experience in gathering data for photogrammetry projects, client support and consultations, software testing, and working with development and marketing teams. Feel free to contact me via Pixpro Discord or email (l.zmejevskis@pix-pro.com) if you have any questions about our blog.
